Stop guessing about retrieval quality.

Automatic quality metrics for RAG. Ship retrieval changes with confidence. Catch regressions before users do.

```python
from seer import SeerClient

client = SeerClient()  # reads SEER_API_KEY from env

query = "How do I reset my password?"

client.log(
    task=query,
    context=retriever.get_passages(query),  # retriever: your existing retrieval component
)
# Seer evaluates recall, precision, groundedness automatically
```

No labels, no annotation, no manual review.

Evaluate every production query with zero labels. Seer assesses context quality automatically.

Get alerts within minutes of quality dropping. See exactly which queries failed and why.
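
To make the alerting idea concrete, here is a minimal sketch of a rolling-window check over per-query quality scores. It is not Seer's alerting logic, which this page does not describe; the class name `RollingQualityAlert`, the window size, and the threshold are all assumptions chosen for illustration.

```python
from collections import deque
from statistics import mean


class RollingQualityAlert:
    """Hypothetical helper: fires when the rolling mean score drops below a threshold."""

    def __init__(self, window: int = 100, threshold: float = 0.7):
        self.scores = deque(maxlen=window)  # keeps only the most recent `window` scores
        self.threshold = threshold

    def observe(self, score: float) -> bool:
        """Record one per-query score; return True if an alert should fire."""
        self.scores.append(score)
        window_full = len(self.scores) == self.scores.maxlen
        # Wait for a full window so a single bad query cannot trigger an alert.
        return window_full and mean(self.scores) < self.threshold


# Illustrative scores only, not real measurements.
monitor = RollingQualityAlert(window=5, threshold=0.7)
healthy = [monitor.observe(s) for s in [0.9, 0.85, 0.9, 0.8, 0.95]]
degraded = [monitor.observe(s) for s in [0.4, 0.3, 0.5, 0.4, 0.35]]
```

A smaller window reacts faster but is noisier; in practice the window and threshold would be tuned to traffic volume.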

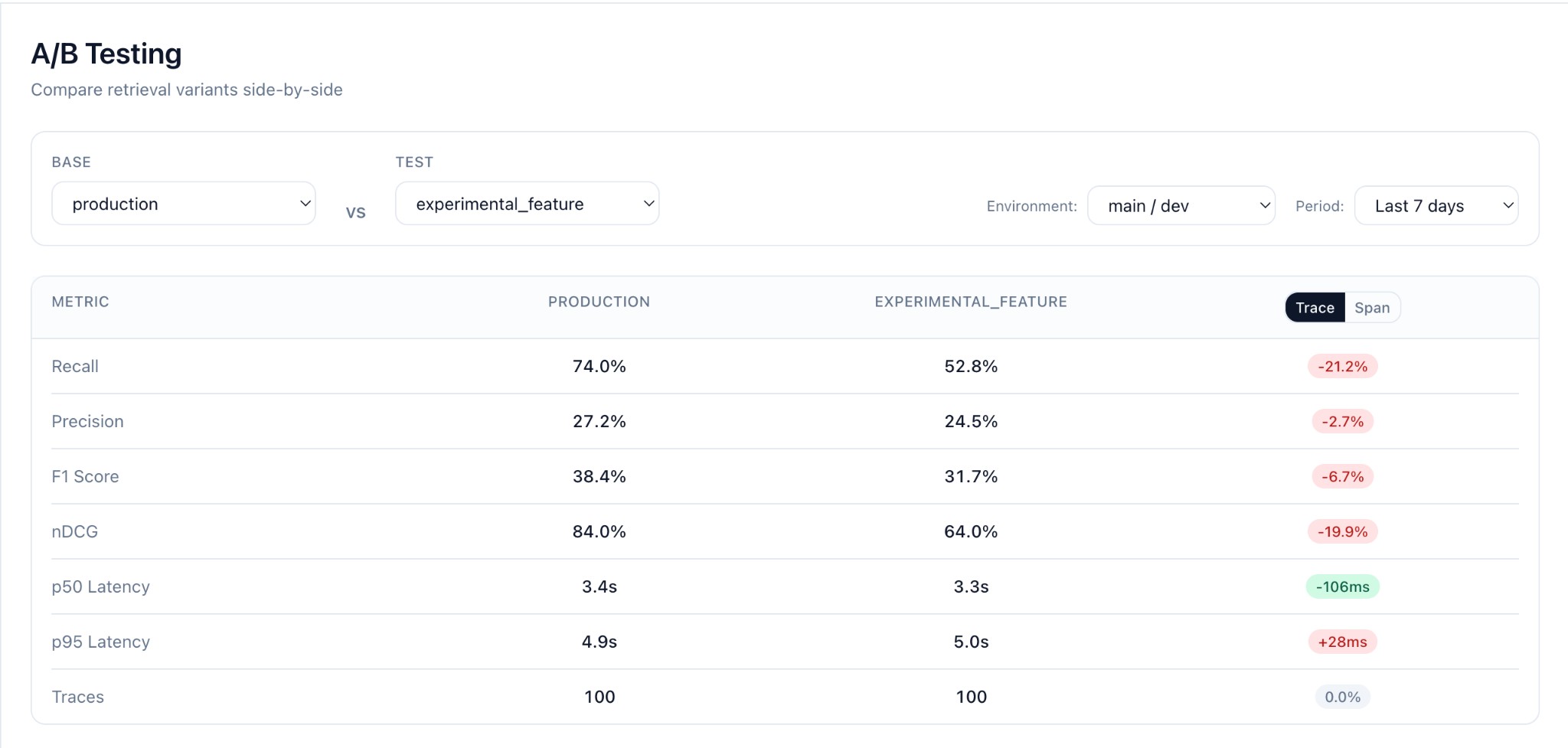

Instantly compare A/B variants with statistical confidence. Know which version wins.
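
One common way to attach statistical confidence to an A/B comparison is a two-proportion z-test on the share of queries each variant passes. The sketch below assumes each evaluated query can be reduced to pass/fail; the function name and the counts are hypothetical, and the page does not say which test Seer itself uses.

```python
from math import sqrt
from statistics import NormalDist


def two_proportion_z_test(pass_a: int, n_a: int, pass_b: int, n_b: int):
    """Two-sided z-test for a difference in pass rates between variants A and B."""
    p_a, p_b = pass_a / n_a, pass_b / n_b
    # Pooled pass rate under the null hypothesis that both variants perform equally.
    pooled = (pass_a + pass_b) / (n_a + n_b)
    se = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value


# Hypothetical counts: variant A passes 780 of 1000 queries, variant B passes 830 of 1000.
z, p_value = two_proportion_z_test(pass_a=780, n_a=1000, pass_b=830, n_b=1000)
print(f"z = {z:.2f}, p = {p_value:.4f}")
```

A small p-value suggests the gap between variants is unlikely to be noise. The test assumes independent queries and enough traffic for the normal approximation to hold.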

38× cheaper than GPT-5: $160/mo for 1M evals

0.87 F1 score: GPT-5-level accuracy

10s per evaluation, vs. weeks of annotation

5 lines to start: drop-in Python SDK

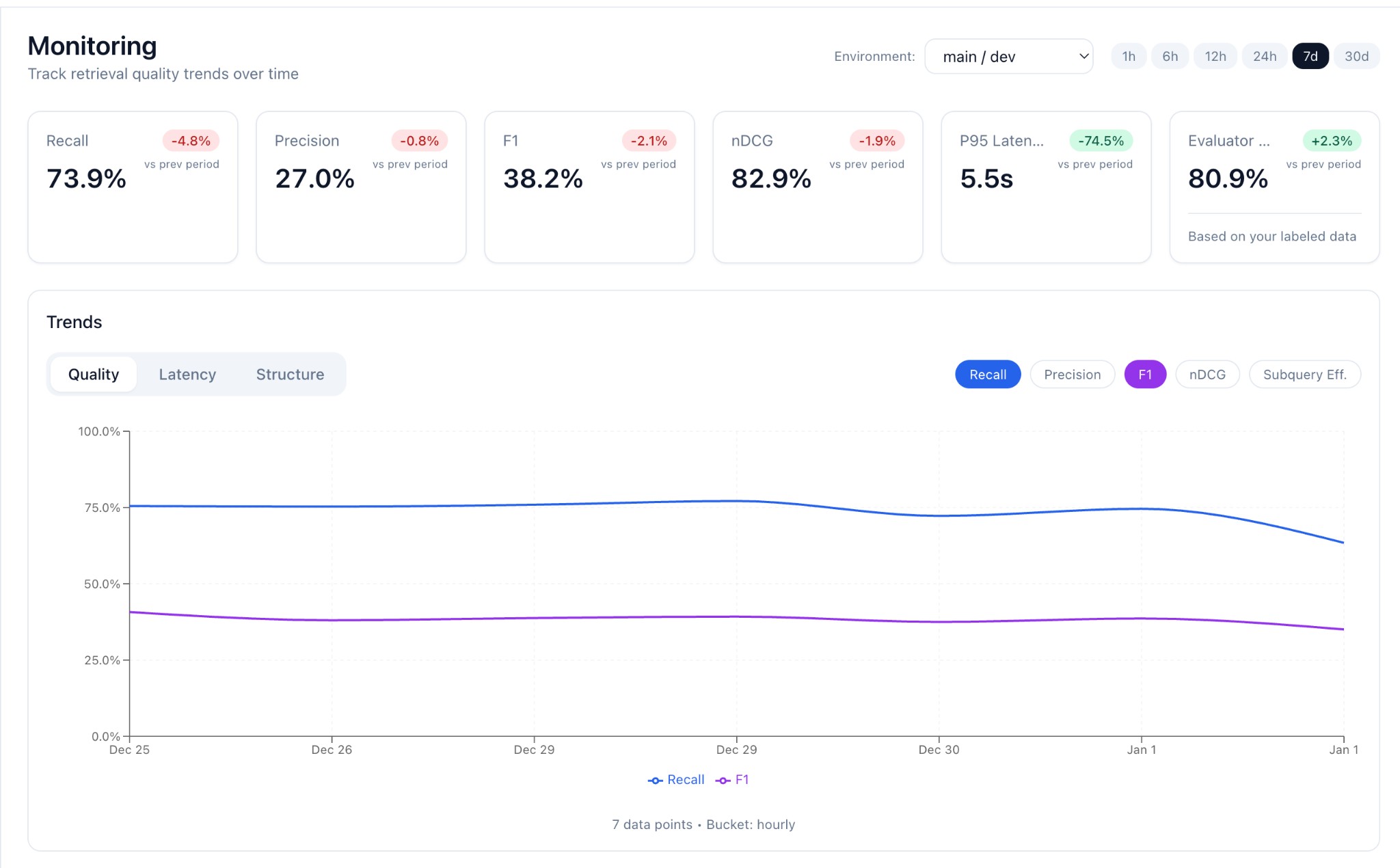

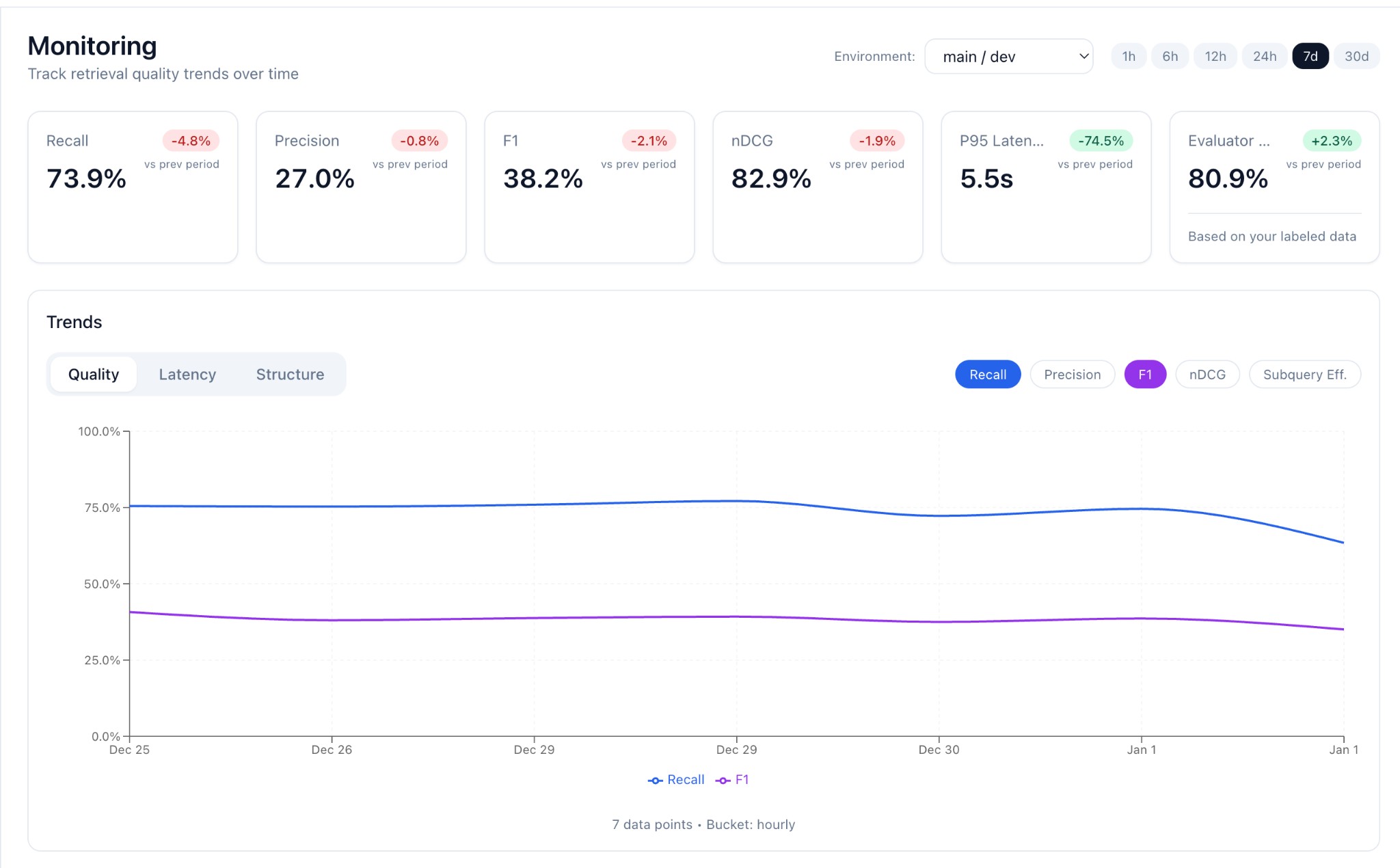

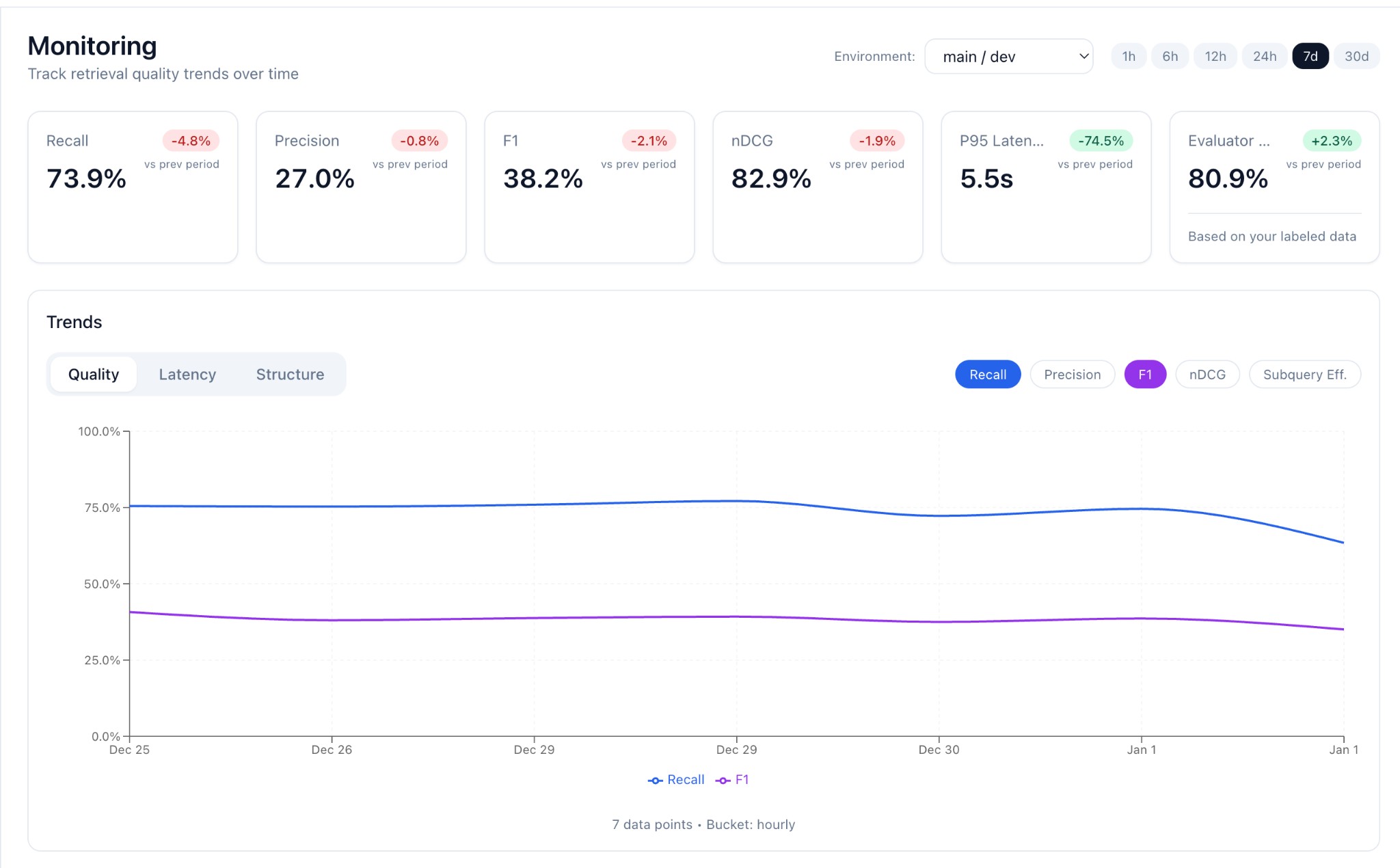

Track recall, precision, and latency across all queries. Get alerts when quality drops.

Compare retrieval variants on real traffic. See which embedding or reranker wins.

Context evaluation accuracy on our benchmark dataset

| Model | Accuracy | Macro F1 | Micro F1 |
|---|---|---|---|
| Seer (Qwen3-4B), our model | 0.777 | 0.86 | 0.87 |
| GPT-5 | 0.776 | 0.878 | 0.866 |
| GPT-5-chat | 0.750 | 0.865 | 0.848 |
| GPT-5-mini | 0.733 | 0.868 | 0.843 |
| Seer (Qwen3-1.7B), our model | 0.661 | 0.7633 | 0.7789 |
| GPT-5-nano | 0.628 | 0.721 | 0.752 |
| Qwen3-4B | 0.481 | 0.5104 | 0.539 |
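
For readers unfamiliar with the two F1 columns: macro F1 averages the per-label F1 scores, so every label counts equally, while micro F1 pools the counts across labels first, so frequent labels dominate. The sketch below uses the standard definitions with made-up counts; the label names are hypothetical, and the benchmark's actual label scheme is not described here.

```python
def f1(tp: int, fp: int, fn: int) -> float:
    """F1 from true positives, false positives, and false negatives."""
    denom = 2 * tp + fp + fn
    return 2 * tp / denom if denom else 0.0


def macro_f1(counts: dict) -> float:
    """Average the per-label F1 scores; every label has equal weight."""
    return sum(f1(*c) for c in counts.values()) / len(counts)


def micro_f1(counts: dict) -> float:
    """Pool tp/fp/fn over all labels, then compute a single F1."""
    tp = sum(c[0] for c in counts.values())
    fp = sum(c[1] for c in counts.values())
    fn = sum(c[2] for c in counts.values())
    return f1(tp, fp, fn)


# Hypothetical (tp, fp, fn) counts per label, for illustration only.
counts = {"relevant": (80, 10, 10), "partial": (10, 10, 10)}
macro = macro_f1(counts)
micro = micro_f1(counts)
print(f"macro F1 = {macro:.3f}, micro F1 = {micro:.3f}")
```

In this toy case the rare label scores poorly, which pulls macro F1 down more than micro F1.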

Estimated cost at different evaluation volumes

| Monthly Evals | Seer-4B | Seer-1.7B | GPT-5 | GPT-5-mini | GPT-5-nano |
|---|---|---|---|---|---|
| 100k | $16 | $2 | $606 | $121 | $24 |
| 1M | $160 | $20 | $6,063 | $1,213 | $243 |
| 10M | $1,600 | $200 | $60,625 | $12,125 | $2,425 |

Seer pricing based on hosted inference. Self-hosted options available for enterprise.
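
The cost ratios can be checked directly from the 1M-evals row of the table. This short sketch only rearranges those published figures into cost per 1,000 evals and a multiple of the Seer-4B price; the variable names are ours.

```python
# Monthly cost in USD at 1M evals, copied from the table above.
monthly_cost_1m = {
    "Seer-4B": 160,
    "Seer-1.7B": 20,
    "GPT-5": 6063,
    "GPT-5-mini": 1213,
    "GPT-5-nano": 243,
}
EVALS = 1_000_000

# Cost per 1,000 evaluations for each model.
per_1k = {model: cost / EVALS * 1000 for model, cost in monthly_cost_1m.items()}

# How many times more expensive each model is than Seer-4B.
ratio_vs_seer_4b = {
    model: cost / monthly_cost_1m["Seer-4B"] for model, cost in monthly_cost_1m.items()
}

for model in monthly_cost_1m:
    print(f"{model}: ${per_1k[model]:.3f} per 1k evals, {ratio_vs_seer_4b[model]:.1f}x Seer-4B")
```

The GPT-5 ratio comes out just under 38, which is where the headline figure comes from.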

Read the docs and start logging. No sales call required.